GPT-5.3 Accelerated by Cerebras

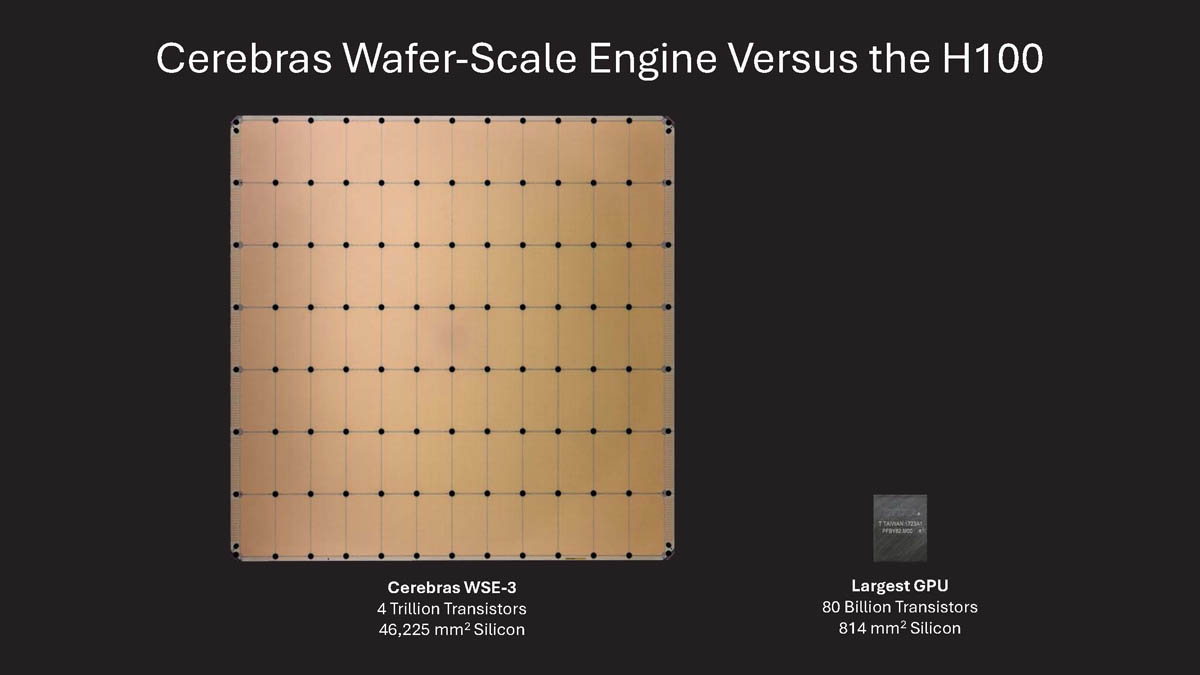

OpenAI's GPT-5.3-Codex-Spark model now runs on Cerebras WSE-3 chips. With this optimization, inference speed exceeds 1,000 tokens per second.

This acceleration matters for applications that require real-time responses, such as chatbots, virtual assistants, and automated text generation systems. Higher token throughput means a complete response is generated in less time, which lowers latency and makes for a smoother user experience.
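To make the link between throughput and latency concrete, here is a rough back-of-the-envelope sketch. It assumes a steady decode rate and ignores time to first token and network overhead. The 500-token response length and the 100 tokens-per-second comparison rate are hypothetical values chosen for illustration, not figures from OpenAI or Cerebras.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate the time to generate num_tokens at a steady decode rate.

    Simplification: ignores time to first token and network overhead.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    response_tokens = 500  # hypothetical response length
    # 100 tokens/s is a hypothetical baseline; 1000 tokens/s is the rate cited in the article.
    for rate in (100.0, 1000.0):
        elapsed = generation_time_seconds(response_tokens, rate)
        print(f"{rate:.0f} tokens/s -> {elapsed:.2f} s for {response_tokens} tokens")
```

Under these assumptions, a tenfold increase in throughput cuts the generation time for the same response by a factor of ten.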

Teams evaluating on-premises deployments should weigh the trade-offs carefully. AI-RADAR offers analytical frameworks at /llm-onpremise for evaluating them.
