Introduction

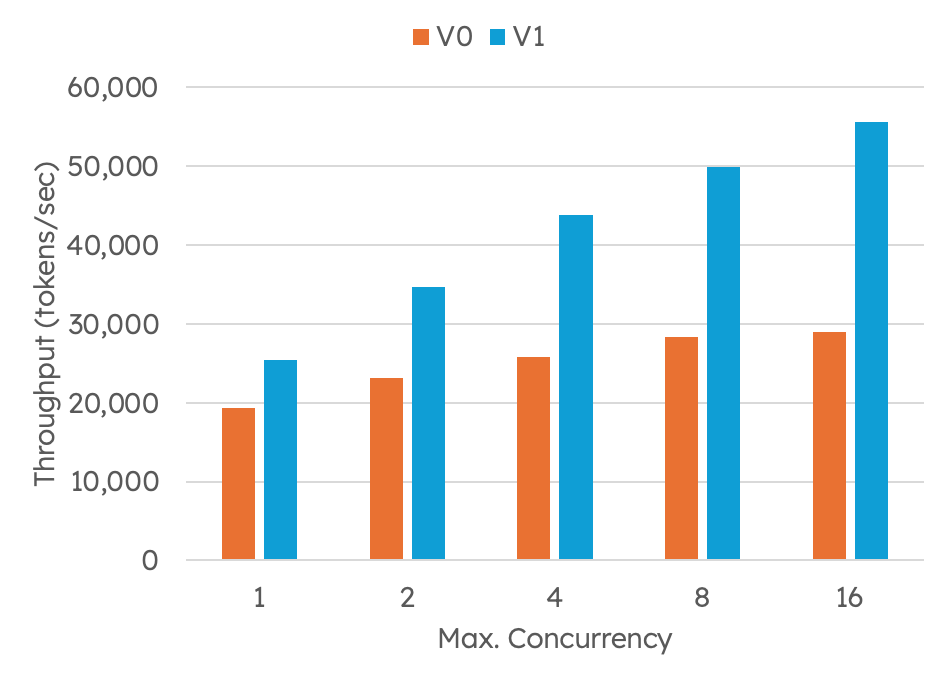

V1 of vLLM, the open-source inference and serving engine for large language models (LLMs), has been released with improved performance and reduced memory usage. This article looks at how V1 supports hybrid models and what that means for development, testing, and production use.

Technical Details

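V1 of vLLM supports hybrid models: architectures that combine Transformer attention layers with other sequence-mixing layers, such as state-space (Mamba-style) layers. Attention layers keep a key-value cache that grows with the length of the sequence, whereas state-space layers carry a fixed-size state. Hybrid models therefore aim to keep the quality of attention on complex inputs while lowering memory use and improving performance on long sequences.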
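To make the memory argument concrete, the sketch below compares the per-sequence cache size of a pure-attention layout with a hybrid layout in which most layers are state-space layers. It is a simplified, back-of-the-envelope estimate: the `CacheConfig` class, the layer counts, and the state size are all hypothetical values chosen for illustration, and the code does not reflect how vLLM itself allocates or pages memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheConfig:
    """Hypothetical layer layout; all numbers are illustrative, not from a real model."""
    attention_layers: int
    ssm_layers: int
    kv_heads: int
    head_dim: int
    ssm_state_elems: int     # recurrent-state elements per state-space layer, per sequence
    bytes_per_elem: int = 2  # assumes 16-bit values (fp16/bf16)


def attention_cache_bytes(cfg: CacheConfig, seq_len: int) -> int:
    # Each attention layer stores one key and one value vector
    # (kv_heads * head_dim elements each) for every token.
    return 2 * cfg.attention_layers * cfg.kv_heads * cfg.head_dim * seq_len * cfg.bytes_per_elem


def ssm_state_bytes(cfg: CacheConfig) -> int:
    # The state-space state has a fixed size; it does not depend on seq_len.
    return cfg.ssm_layers * cfg.ssm_state_elems * cfg.bytes_per_elem


def total_cache_bytes(cfg: CacheConfig, seq_len: int) -> int:
    return attention_cache_bytes(cfg, seq_len) + ssm_state_bytes(cfg)


# Two assumed 32-layer layouts: all attention vs. 4 attention + 28 state-space layers.
pure_attention = CacheConfig(attention_layers=32, ssm_layers=0,
                             kv_heads=8, head_dim=128, ssm_state_elems=0)
hybrid = CacheConfig(attention_layers=4, ssm_layers=28,
                     kv_heads=8, head_dim=128, ssm_state_elems=1_000_000)

for seq_len in (1_024, 32_768):
    pure_mib = total_cache_bytes(pure_attention, seq_len) / 2**20
    hybrid_mib = total_cache_bytes(hybrid, seq_len) / 2**20
    print(f"seq_len={seq_len}: pure attention {pure_mib:.1f} MiB, hybrid {hybrid_mib:.1f} MiB")
```

Under these assumptions the attention cache scales linearly with sequence length while the state-space contribution stays constant, so the gap between the two layouts widens as sequences get longer. Real models also keep additional buffers that this estimate ignores.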

Practical Implications

Because V1 supports hybrid models directly, developers can build and test them in the same framework they use for serving. Together with the improved performance and reduced memory usage, this makes V1 a better fit for production environments.

Conclusion

V1 of vLLM brings support for hybrid models alongside improved performance and reduced memory usage, making it a stronger option for production deployments.
