
AI generated

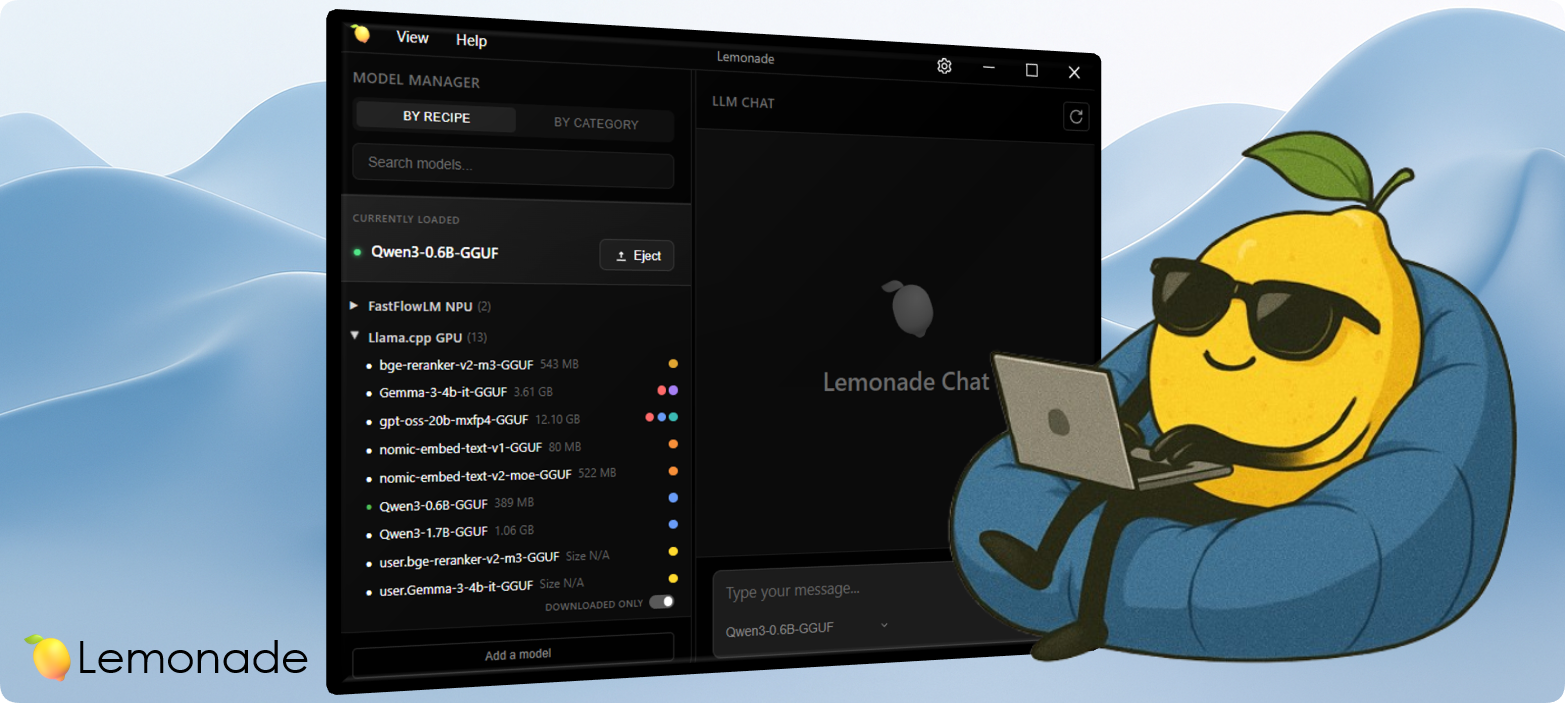

Lemonade v9.1.4: GLM-4.7-Flash-GGUF support and LM Studio compatibility

Lemonade, an open-source server for running LLMs locally, has reached version 9.1.4, which brings several new features and improvements.

## GLM-4.7-Flash-GGUF Support

Version 9.1.4 adds support for GLM-4.7-Flash-GGUF and ships updated llama.cpp builds for the Vulkan, CPU, and ROCm backends. As a result, users get the latest llama.cpp optimizations for these models on each backend.

## LM Studio Compatibility

Lemonade is now compatible with LM Studio. It can use GGUF files that were already downloaded with LM Studio, so the same models do not have to be downloaded twice. To enable this, start Lemonade and point it at the LM Studio models directory.
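Pointing Lemonade at an existing models directory amounts to discovering the `.gguf` files stored under it. The Python sketch below shows only that discovery step. It is not Lemonade's code, and the function name `find_gguf_files` is hypothetical. The example path `~/.lmstudio/models` is an assumed default that may differ depending on your LM Studio version and settings.

```python
from pathlib import Path


def find_gguf_files(models_dir: str) -> list[Path]:
    """Return every .gguf file under models_dir (searched recursively), sorted.

    Hypothetical helper for illustration; returns [] if the directory is missing.
    """
    root = Path(models_dir).expanduser()
    if not root.is_dir():
        return []
    # LM Studio-style layouts nest models in publisher/model subfolders,
    # so search recursively rather than only at the top level.
    return sorted(p for p in root.rglob("*.gguf") if p.is_file())


if __name__ == "__main__":
    # Assumed default location; LM Studio lets users change it in its settings.
    for path in find_gguf_files("~/.lmstudio/models"):
        print(path)
```

The search is recursive because model folders are typically nested per publisher and model, so a flat listing of the top-level directory would miss most files.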

## New Platform Support

In addition to Ubuntu and Windows, Lemonade now officially supports Arch, Fedora, and Docker, thanks to community contributions. Official Docker images are available for each release.

## Mobile Companion App

A new mobile app connects to a Lemonade server and provides a chat interface with support for vision-language models (VLMs). The app is already available on iOS, and an Android release is coming soon.

## Configuration Recipes

Model settings, such as the choice between the ROCm and Vulkan backends or custom llama.cpp arguments, can now be saved to a JSON file. These settings are applied automatically the next time the model is loaded.
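As an illustration of the idea, here is a minimal Python sketch of per-model settings persisted to JSON and reapplied on the next load. Lemonade's actual recipe file format is not described here, so the function names (`save_recipe`, `settings_for`) and the keys `backend` and `llamacpp_args` are all hypothetical. Only the backend names and the general mechanism come from the release description.

```python
import json
from pathlib import Path

# Backends mentioned in the release notes; the validation itself is illustrative.
ALLOWED_BACKENDS = {"cpu", "vulkan", "rocm"}


def load_recipes(path: Path) -> dict:
    """Read all saved recipes, or return an empty dict if none exist yet."""
    if not path.exists():
        return {}
    return json.loads(path.read_text())


def save_recipe(path: Path, model: str, backend: str, llamacpp_args: list[str]) -> None:
    """Store settings for one model while keeping other models' entries intact.

    Hypothetical schema: {model_name: {"backend": ..., "llamacpp_args": [...]}}.
    """
    if backend not in ALLOWED_BACKENDS:
        raise ValueError(f"unknown backend: {backend}")
    recipes = load_recipes(path)
    recipes[model] = {"backend": backend, "llamacpp_args": llamacpp_args}
    path.write_text(json.dumps(recipes, indent=2))


def settings_for(path: Path, model: str, default_backend: str = "cpu") -> dict:
    """Return the saved settings for a model, falling back to defaults."""
    default = {"backend": default_backend, "llamacpp_args": []}
    return load_recipes(path).get(model, default)
```

For example, `save_recipe(Path("recipes.json"), "GLM-4.7-Flash-GGUF", "rocm", ["--ctx-size", "8192"])` stores a ROCm recipe. A later `settings_for(...)` call for the same model returns those settings instead of the defaults. Keying the file by model name lets each model keep its own backend and arguments.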

## Upcoming Developments

Work is underway on macOS support through llama.cpp with Metal, image generation through stable-diffusion.cpp, and a "marketplace" of local AI apps.

Lemonade aims to be an open-source alternative to solutions like Ollama and LM Studio, with the goal of growing the ecosystem of local AI applications.
