GEMM Engines and Accumulator Precision

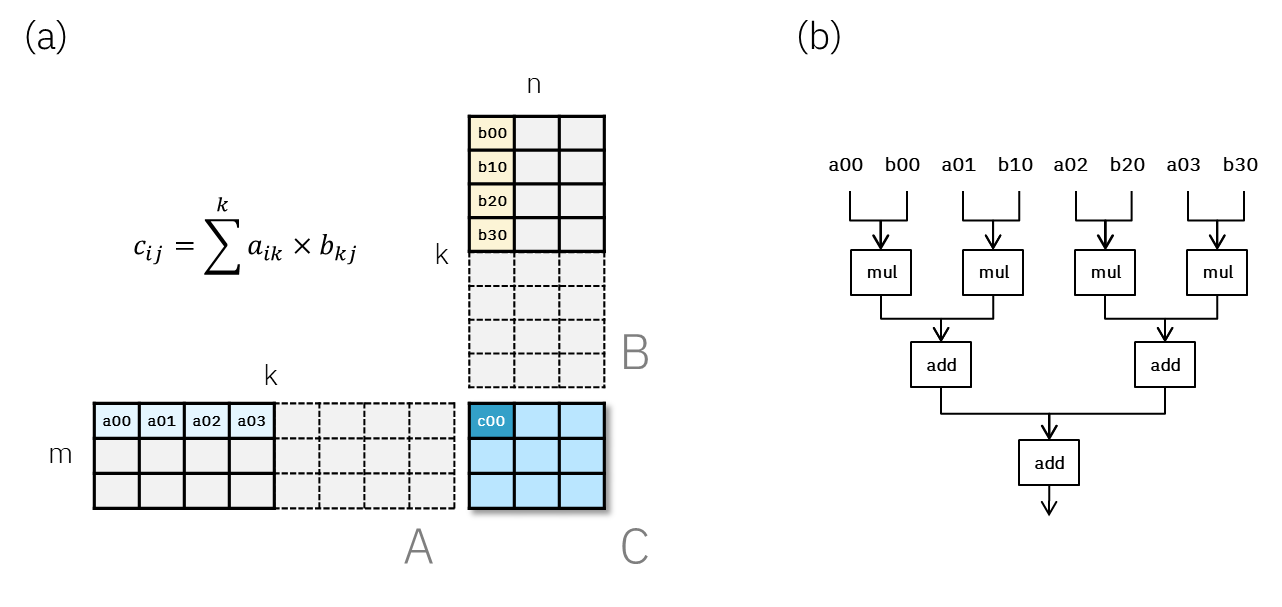

GPUs and custom accelerators integrate specialized compute engines for general matrix multiplication (GEMM). These engines multiply small, fixed-size tensor blocks efficiently. Compilers or libraries split a large matrix multiplication into block-sized subproblems and feed them to these engines.
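The decomposition can be illustrated with a minimal pure-Python sketch. The function name `matmul_tiled` and the `block` parameter are hypothetical and chosen for illustration; real compilers and libraries pick tile shapes to match the hardware engine, and the innermost loops here only stand in for one call to such an engine.

```python
def matmul_tiled(a, b, block=2):
    """Multiply a (M x K) by b (K x N), one block-sized tile at a time."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, block):              # tile row of the output
        for j0 in range(0, n, block):          # tile column of the output
            for k0 in range(0, k, block):      # step along the shared K dimension
                # The loops below stand in for one small GEMM on the engine.
                for i in range(i0, min(i0 + block, m)):
                    for j in range(j0, min(j0 + block, n)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + block, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c


a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
b = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]
print(matmul_tiled(a, b))  # [[58.0, 64.0], [139.0, 154.0]]
```

Each output tile is built up from several partial products along K, which is why the precision of the accumulator that holds those partial sums matters.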

An often overlooked aspect is that, for hardware efficiency reasons, the FP32 output of a Tensor Core may have fewer than 23 effective mantissa bits. In other words, the precision of the Tensor Core operation is less than FP32. This design choice can affect model accuracy under certain circumstances.

Verifying Precision with Triton

The article describes an approach to investigate accumulator precision using a Triton kernel. The idea is to apply a bit mask that zeroes the last N<sub>trun</sub> bits of the Tensor Core output. If the masked output matches a reference computed without truncation, those bits carried no information in the first place, so the accumulator precision can be inferred from the largest N<sub>trun</sub> that leaves the result unchanged.
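The masking step itself can be sketched on the host in plain Python, without a GPU. This is not the article's Triton kernel; the helper name `truncate_mantissa` is hypothetical, and the sketch only shows how a bit mask zeroes the low bits of an FP32 value.

```python
import struct


def truncate_mantissa(x, n_trun):
    """Zero the n_trun least significant bits of x viewed as an FP32 bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]   # float32 -> raw 32 bits
    bits &= (0xFFFFFFFF << n_trun) & 0xFFFFFFFF           # clear the low n_trun bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


x = 1.0 + 2.0 ** -23               # 1.0 with only the last mantissa bit set
print(truncate_mantissa(x, 0) == x)    # True: nothing is masked
print(truncate_mantissa(x, 1))         # 1.0: the last bit is gone
print(truncate_mantissa(1.5, 10))      # 1.5: its low 10 bits were already zero
```

The last line is the situation the experiment looks for: a value whose low bits are already zero is unaffected by the mask.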

Triton was chosen because it allows the method to be generalized to other accelerators that support it, speeding up development.

Experimental Results

The results show that truncating up to the 10 least significant mantissa bits of the output (using the H100 FP8 Tensor Core) produces the same results as the run without truncation. Since FP32 has 23 mantissa bits, this leaves 13 effective mantissa bits, which suggests that the accumulator is implemented in a special FP22 format (e8m13: 1 sign bit, 8 exponent bits, 13 mantissa bits) for computational efficiency. The same experiment was repeated on an RTX4000 GPU and showed the same behavior.
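The inference can be reproduced in a small simulation. The sketch below does not touch real hardware: `emulated_dot` is a hypothetical stand-in that truncates its accumulator after every step, which is an assumption made for illustration and not a statement about how the Tensor Core rounds internally. The sweep then recovers the hidden number of dropped bits from the outputs alone.

```python
import random
import struct


def truncate_mantissa(x, n_trun):
    """Zero the n_trun least significant bits of x viewed as an FP32 bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= (0xFFFFFFFF << n_trun) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def emulated_dot(xs, ys, n_dropped):
    """Dot product whose accumulator loses n_dropped mantissa bits (assumed model)."""
    acc = 0.0
    for x, y in zip(xs, ys):
        acc = truncate_mantissa(acc + x * y, n_dropped)
    return acc


def infer_dropped_bits(outputs):
    """Largest N_trun for which masking leaves every output unchanged."""
    n = 0
    while n < 23 and all(truncate_mantissa(o, n + 1) == o for o in outputs):
        n += 1
    return n


random.seed(0)
HIDDEN_DROPPED = 10  # the "unknown" property of the emulated engine
outputs = []
for _ in range(64):
    xs = [random.uniform(-1, 1) for _ in range(32)]
    ys = [random.uniform(-1, 1) for _ in range(32)]
    outputs.append(emulated_dot(xs, ys, HIDDEN_DROPPED))
# Probe whose exact result has mantissa bit 10 set, so the sweep cannot overshoot.
outputs.append(emulated_dot([1.0], [1.0 + 2.0 ** -13], HIDDEN_DROPPED))

n_trun = infer_dropped_bits(outputs)
mantissa_bits = 23 - n_trun
print(n_trun, mantissa_bits, 1 + 8 + mantissa_bits)  # 10 13 22
```

The final line is the same arithmetic as in the text: 23 − 10 = 13 mantissa bits, and 1 + 8 + 13 = 22 bits in total.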

It is essential to confirm that the Tensor Core instruction actually executed is the expected one (FP8). In rare situations, the Triton compiler may choose FP16 Tensor Core instructions for certain FP8 calculations. NVIDIA's profiling tool ncu can be used to inspect the underlying GPU instructions associated with the Triton tl.dot call.

If matrix multiplication precision is a critical concern, consider accumulating intermediate results in FP32 rather than relying solely on the Tensor Core's internal accumulator.
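Why intermediate FP32 accumulation helps can be seen in a toy model. The sketch below assumes a truncating 13-bit-mantissa accumulator (a simplification, not a description of the hardware) and compares one long reduction against a reduction split into chunks whose partial sums are added in FP32. The function names and the chunk size of 128 are hypothetical.

```python
import struct


def to_f32(x):
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def truncate_mantissa(x, n_trun):
    """Zero the n_trun least significant bits of x viewed as an FP32 bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= (0xFFFFFFFF << n_trun) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def dot_low_precision(xs, ys, n_trun=10):
    """Whole reduction in the reduced-precision accumulator (assumed model)."""
    acc = 0.0
    for x, y in zip(xs, ys):
        acc = truncate_mantissa(acc + x * y, n_trun)
    return acc


def dot_with_fp32_promotion(xs, ys, chunk=128, n_trun=10):
    """Reduce each chunk in low precision, then add the partial sums in FP32."""
    total = 0.0
    for start in range(0, len(xs), chunk):
        partial = dot_low_precision(xs[start:start + chunk],
                                    ys[start:start + chunk], n_trun)
        total = to_f32(total + partial)
    return total


K = 20000
xs = [1.0] * K
ys = [1.0] * K
print(dot_low_precision(xs, ys))        # 16384.0: the accumulator stalls at 2**14
print(dot_with_fp32_promotion(xs, ys))  # 20000.0: the exact answer
```

With 13 mantissa bits the accumulator can no longer represent 16385, so every further addition of 1.0 is lost; keeping each low-precision reduction short and summing the partial results in FP32 avoids the stall in this example.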

Conclusions

Understanding accumulator precision is critical for users with accuracy-sensitive applications who develop custom kernels, as well as for hardware designers who need to emulate this behavior for their next-generation designs. The Triton-kernel-based approach can be combined with the PyTorch ecosystem, extending the same technique to other existing and future accelerators that support the Triton language.
