The Challenge of Local LLM Development

A user with an RTX 5090 GPU shared their experience of local development with large language models (LLMs). After experimenting successfully with cloud-based tools such as Cursor and Claude, they ran into cost limits and concerns about the privacy of sensitive data.

Hardware Setup and Disappointing Results

The user then moved to a local setup, configuring VS Code with Ollama and large models. The results were disappointing: the models struggled to make full use of the local environment and failed to call external tools to enrich the context of their tasks.

Questions and Future Perspectives

The user questions whether local development with models of this size is actually feasible, and asks whether the difficulty is inherent or whether specific tools and configurations could improve performance. The goal is an environment in which models, although limited in size, can access up-to-date information and complete tasks effectively, approaching the capabilities of cloud IDEs.
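To make the tool-use question concrete, here is a minimal sketch of how a local model served by Ollama could be given one external tool through Ollama's `/api/chat` endpoint. It is not the user's setup, which the post does not describe in detail. The model name, the `get_today` tool, the helper function names, and the `num_ctx` value are all assumptions chosen for illustration; whether a given model calls tools reliably depends on the model itself.

```python
import json
import urllib.request
from datetime import date

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
MODEL = "llama3.1"  # assumption: any locally pulled model that supports tool calling


def get_today() -> str:
    """Hypothetical tool: gives the model a fact it cannot know from its weights."""
    return date.today().isoformat()


# Map of tool names to Python callables (hypothetical registry for this sketch).
TOOLS = {"get_today": get_today}

# JSON schema describing the tool, in the format the chat endpoint accepts.
TOOL_SCHEMAS = [{
    "type": "function",
    "function": {
        "name": "get_today",
        "description": "Return today's date in ISO format",
        "parameters": {"type": "object", "properties": {}, "required": []},
    },
}]


def build_request(messages, num_ctx=8192):
    """Build the request body. num_ctx is an assumed value, not a recommendation."""
    return {
        "model": MODEL,
        "messages": messages,
        "tools": TOOL_SCHEMAS,
        "stream": False,
        "options": {"num_ctx": num_ctx},
    }


def run_tool_calls(message):
    """Execute every tool call in an assistant message; return 'tool' role messages."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        args = fn.get("arguments") or {}
        if isinstance(args, str):  # tolerate arguments delivered as a JSON string
            args = json.loads(args)
        handler = TOOLS.get(fn["name"])
        if handler is None:
            content = f"unknown tool: {fn['name']}"
        else:
            content = str(handler(**args))
        results.append({"role": "tool", "content": content})
    return results


def chat(messages):
    """Send one non-streaming chat request to the local Ollama server."""
    body = json.dumps(build_request(messages)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]


def answer(question, max_rounds=3):
    """Loop: ask the model, run any requested tools, feed the results back."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_rounds):
        reply = chat(messages)
        messages.append(reply)
        tool_messages = run_tool_calls(reply)
        if not tool_messages:
            return reply.get("content", "")
        messages.extend(tool_messages)
    return messages[-1].get("content", "")
```

With an Ollama server running locally, `answer("What is today's date?")` would send the question, run `get_today` if the model requests it, and return the model's final reply. Editor integrations typically wrap a similar loop, so a model that never emits tool calls in this bare setting is unlikely to behave differently inside VS Code.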

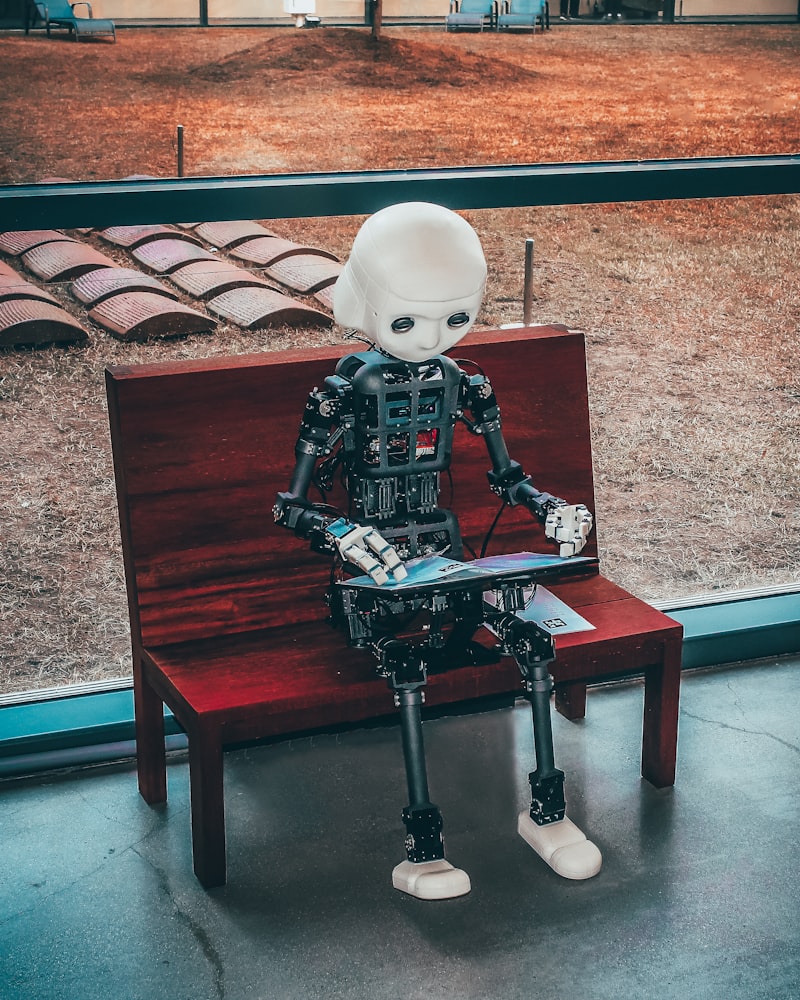

The Context: The Importance of Edge AI

Local development of artificial intelligence applications, often referred to as "edge AI," is becoming increasingly relevant. Running models directly on users' devices offers advantages in latency, privacy, and reliability, and reduces dependence on stable internet connections and remote servers. However, as the user's experience shows, getting good performance from these models in local environments remains a significant challenge.
