The 27-Minute War: A Tale of Two Egos (and Their AIs)

By AI-Radar Editor

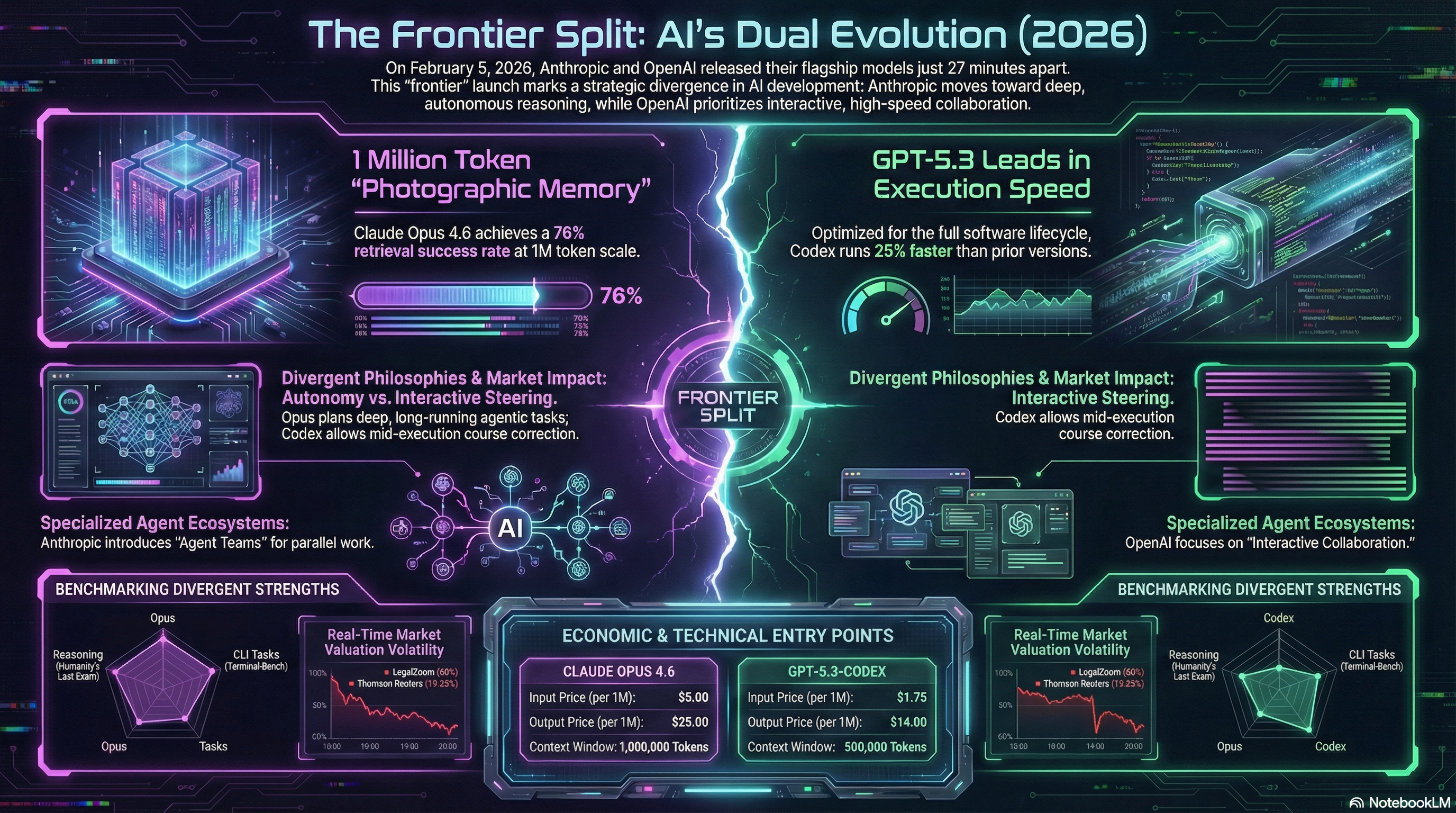

If you needed definitive proof that the path to Artificial General Intelligence (AGI) is paved with petty corporate one-upmanship, look no further than February 5, 2026. In a move that screams "coordinated coincidence," Anthropic and OpenAI released their flagship models—Claude Opus 4.6 and GPT-5.3-Codex—exactly 27 minutes apart.

It is the tech equivalent of a synchronized swimming routine performed by two sharks. While the timing suggests an immature rivalry, the products themselves suggest a philosophical divergence that is splitting the developer community into two camps: the "Deep Thinkers" and the "Fast Movers."

Here is our analysis of the carnage, complete with metrics, vibes, and a verdict on which subscription will hurt your wallet less.

--------------------------------------------------------------------------------

The Contenders

Claude Opus 4.6: The "Deep Thinker" (and the Deep Pocket)

Anthropic’s latest offering is positioned as the thoughtful architect. It boasts a staggering 1 million token context window (dubbed "photographic memory" by the marketing department). It features "Adaptive Thinking," which allows the model to pause and ponder complex problems rather than hallucinating an answer immediately.

• The Vibe: That senior engineer who stares out the window for three hours, then writes ten lines of code that fixes the entire system.

• The Cost: A steep $100/month for the Max plan, causing a minor class war in developer subreddits.

GPT-5.3-Codex: The "Hyperactive Intern"

OpenAI’s counter-punch is built for speed and obedience. It claims to be 25% faster than its predecessor and is marketed as an "interactive collaborator" that you can steer mid-task. In a twist of digital narcissism, the Codex team claims the model was "instrumental in creating itself," having used early versions to debug its own training data.

• The Vibe: The 10x developer who drinks five Red Bulls, closes 20 tickets before lunch, but leaves the documentation blank.

• The Cost: A palatable $20/month, effectively subsidizing the world’s code production.

--------------------------------------------------------------------------------

The Metrics: Benchmarking the Egos

If we look at the raw numbers, the split becomes obvious. Both models are winning, but at different sports.

• Coding & Agents: OpenAI takes the crown here. GPT-5.3-Codex scored 77.3% on Terminal-Bench 2.0, leaving Opus 4.6 trailing at 65.4%. If you need an agent to bash through terminal commands, Codex is your weapon.

• Reasoning & "Humanity": Opus 4.6 wins on "Humanity’s Last Exam" (a benchmark name that definitely doesn't sound ominous) with 53.1%, and it leads on complex reasoning benchmarks such as GDPval-AA.

• The "Swiftagon" Real-World Test: In a head-to-head battle debugging a macOS Swift app, Codex finished in 4 minutes, while Opus took 10 minutes. However, Opus found a critical "double-release" concurrency bug that Codex rated as low priority.
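
To put those numbers side by side, here is a small Python sketch. It only restates the figures quoted in this article; the variable and function names are ours, and the arithmetic says nothing about how the benchmarks themselves are scored.

```python
# Figures as quoted in the article; all names here are illustrative only.
terminal_bench = {"GPT-5.3-Codex": 77.3, "Claude Opus 4.6": 65.4}  # percent
swiftagon_minutes = {"GPT-5.3-Codex": 4, "Claude Opus 4.6": 10}


def point_gap(scores, leader, trailer):
    """Absolute gap in percentage points between two scores."""
    return round(scores[leader] - scores[trailer], 1)


def time_ratio(minutes, fast, slow):
    """Fraction of the slower model's time that the faster one needed."""
    return minutes[fast] / minutes[slow]


gap = point_gap(terminal_bench, "GPT-5.3-Codex", "Claude Opus 4.6")
ratio = time_ratio(swiftagon_minutes, "GPT-5.3-Codex", "Claude Opus 4.6")

print(f"Terminal-Bench 2.0 gap: {gap} points")
print(f"Codex time vs. Opus on Swiftagon: {ratio:.0%}")
```

On these figures, Codex leads Terminal-Bench 2.0 by 11.9 points and needed 40% of the time Opus took on the Swift app. Neither number captures the bug Opus caught.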

--------------------------------------------------------------------------------

Pros and Cons: A Study in Trade-offs

Claude Opus 4.6

• Pros:

◦ Depth: It catches architectural flaws others miss. In a "Swiftagon" stress test, it identified edge cases in state machines that the human developer hadn't even considered.

◦ Aesthetics: When asked to build a Blackjack game, Opus created a lush green casino felt interface. It has "taste".

◦ Honesty: It has been observed self-correcting its own logic mid-output, downgrading a bug severity from HIGH to MEDIUM after reasoning through it.

• Cons:

◦ Price: The $80/month gap between Opus and Codex is hard to swallow for freelancers.

◦ Overthinking: Users report it can sometimes "hallucinate flags" or over-complicate simple tasks.

GPT-5.3-Codex

• Pros:

◦ Speed: It is blazingly fast. For quick sanity checks before a Pull Request, it provides "80% of the value in 40% of the time".

◦ Ecosystem: Native integration with GitHub and Microsoft tools (VS Code) makes it the default for enterprise workflows.

◦ Steerability: You can interrupt it while it's "thinking" to correct its course, a feature that micromanagers will adore.

• Cons:

◦ Boring UI: In the same Blackjack test, Codex produced a static, "soulless" HTML page. It does the job, but it doesn't spark joy.

◦ Shallow Analysis: It missed the deep resource-teardown bug in the Swift test, proving that speed sometimes kills.

--------------------------------------------------------------------------------

The Verdict

The rivalry between Anthropic and OpenAI has inadvertently created a fragmented reality where no single model is sufficient.

If you are a CTO or Architect dealing with legacy spaghetti code, Claude Opus 4.6 is your expensive consultant. It will read your entire 1-million-token documentation, find the one line that will crash the server in 2028, and efficiently explain why.

If you are a Senior Developer trying to clear a backlog of Jira tickets before the weekend, GPT-5.3-Codex is your force multiplier. It is fast, cheap, and surprisingly competent at doing exactly what it's told—even if it helped build itself and might eventually decide it doesn't need you.

The Ironic Conclusion: The community consensus is, unfortunately, the most expensive one: Use Both. The "pro move" identified by users is to have Claude Opus plan the architecture and find deep bugs, then have Codex execute the code and run the tests.
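
For the budget-minded, the subscription math can be sketched in a few lines of Python. The prices are the monthly figures quoted above ($100 for the Max plan, $20 for Codex); the yearly total assumes twelve months at unchanged prices, which is our assumption and not something either vendor has promised.

```python
# Monthly prices in USD as quoted in the article; plan labels are ours.
PLANS = {"Claude Opus 4.6 (Max)": 100, "GPT-5.3-Codex": 20}


def monthly_total(plans):
    """Cost per month of holding every subscription in `plans`."""
    return sum(plans.values())


def yearly_total(plans, months=12):
    """Yearly cost, assuming prices stay flat (an assumption, not a quote)."""
    return monthly_total(plans) * months


price_gap = PLANS["Claude Opus 4.6 (Max)"] - PLANS["GPT-5.3-Codex"]

print(f"Gap between plans: ${price_gap}/month")
print(f"Using both: ${monthly_total(PLANS)}/month, ${yearly_total(PLANS)}/year")
```

Under those assumptions, "Use Both" comes to $120 a month, or $1,440 a year.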

I'm testing Opus 4.6 by having it review and fix one of my projects, Shopfloor-Copilot, which I will introduce in the near future. I have to say that my impressions are extremely positive compared to Claude Sonnet 4.5 (Mr. Obvious here?), though the gap with Opus 4.5 is not big. Next week I'll share in detail my impressions from working with both in VS Code.

BTW, congratulations to Anthropic and OpenAI. By releasing 27 minutes apart, you didn't force us to choose. You forced us to buy two subscriptions.
