AI generated

AMD Making It Easier To Install vLLM For ROCm

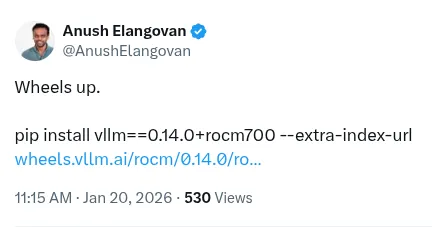

AMD has announced a new way to simplify the installation of vLLM (a library for large language model inference and serving) on AMD Radeon and Instinct hardware via ROCm.

## Simplified Installation

Until now, running vLLM on AMD hardware meant either compiling it from source or using the Docker containers provided by AMD. A Python wheel is now available, so vLLM can be installed directly, without Docker. This should make it easier for developers to use AMD GPUs for AI workloads.
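Once a wheel has been installed, it can be useful to confirm that the environment really contains vLLM and a ROCm build of PyTorch. The following is a minimal sketch of such a check, not an official AMD or vLLM tool. It assumes the wheel registers under the distribution name `vllm` and that PyTorch is installed alongside it; the function name `describe_vllm_install` is hypothetical. The exact install command is not given here, so it is not shown.

```python
import importlib.metadata


def describe_vllm_install() -> dict:
    """Report whether vLLM is installed and whether PyTorch looks like a ROCm build."""
    report = {"vllm_version": None, "torch_hip_version": None, "gpu_visible": False}

    # Assumption: the wheel is published under the distribution name "vllm".
    try:
        report["vllm_version"] = importlib.metadata.version("vllm")
    except importlib.metadata.PackageNotFoundError:
        return report  # vLLM is not installed in this environment

    try:
        import torch
    except Exception:
        return report  # PyTorch is missing or failed to load

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    report["torch_hip_version"] = getattr(torch.version, "hip", None)
    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API.
    report["gpu_visible"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    print(describe_vllm_install())
```

If `vllm_version` is set, `torch_hip_version` is not `None`, and `gpu_visible` is `True`, the environment is at least plausibly ready for vLLM on an AMD GPU. The check does not load a model or start a server.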

## General Context

Large language model (LLM) inference is a rapidly growing field, with strong demand for efficient hardware. Being able to install and configure libraries like vLLM easily lets developers spend their time building applications rather than managing complex installation procedures.
