Effective context management is crucial for AI agents, especially as tasks grow longer. Deep Agents, LangChain's open-source SDK, provides a framework for building agents that can plan, spawn sub-agents, and use a filesystem to carry out complex tasks.

Context Compression Techniques

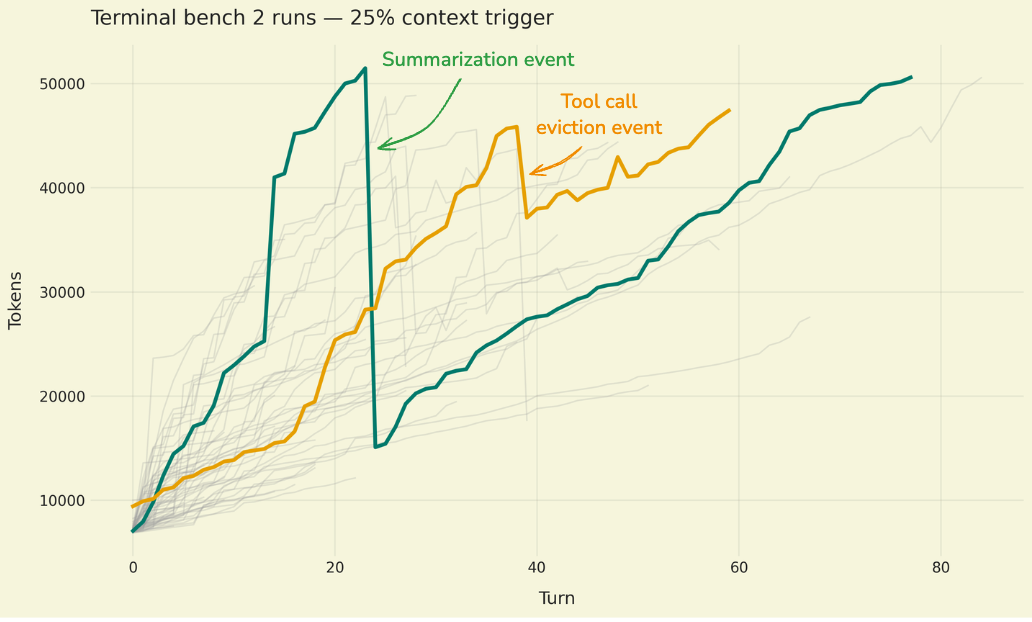

Deep Agents uses three compression techniques to keep the conversation within the model's context window:

- Offloading large tool results: Tool responses exceeding 20,000 tokens are saved to the filesystem and replaced in the context with a file reference and a preview.

- Offloading large tool inputs: Tool arguments for file write/edit operations are truncated and replaced with a file pointer when the context exceeds 85% of the available window.

- Summarization: When offloading alone is not enough, the agent generates a structured summary of the conversation (session intent, artifacts created, next steps) that replaces the full message history. The original history is still preserved on the filesystem.
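The three mechanisms can be illustrated in plain Python. This is a minimal sketch under stated assumptions, not the Deep Agents implementation: only the 20,000-token and 85% thresholds come from the description above. The `ContextManager` class, the `write_file`/`edit_file` tool names, the in-memory `files` dict standing in for the filesystem, the 200-character preview, the 4-characters-per-token heuristic, and the `summarizer` callback are all hypothetical.

```python
import json
from dataclasses import dataclass, field, replace
from typing import Callable, Optional

# Thresholds mirror the figures described in the article; every other name and
# constant here (preview size, tool names, token heuristic) is hypothetical.
TOOL_RESULT_TOKEN_LIMIT = 20_000
INPUT_TRUNCATION_RATIO = 0.85
PREVIEW_CHARS = 200
WRITE_TOOLS = {"write_file", "edit_file"}  # hypothetical tool names


def count_tokens(text: str) -> int:
    """Rough heuristic (~4 characters per token); a real agent would use the model's tokenizer."""
    return len(text) // 4


@dataclass
class Message:
    role: str                         # "user", "assistant", "tool" or "system"
    content: str = ""
    tool_name: Optional[str] = None   # set on assistant tool calls and on tool results
    tool_args: Optional[dict] = None  # arguments of an assistant tool call


@dataclass
class ContextManager:
    context_window: int
    files: dict = field(default_factory=dict)  # in-memory stand-in for the agent filesystem
    _next_id: int = 0

    def _save(self, folder: str, text: str) -> str:
        """Store text under a fresh path and return that path."""
        self._next_id += 1
        path = f"/{folder}/{self._next_id}.txt"
        self.files[path] = text
        return path

    def usage(self, history: list) -> float:
        """Fraction of the context window taken by message contents and tool arguments."""
        used = sum(count_tokens(m.content) + count_tokens(json.dumps(m.tool_args or {}))
                   for m in history)
        return used / self.context_window

    def offload_tool_result(self, msg: Message) -> Message:
        """Technique 1: move an oversized tool result to a file, keep a reference plus a preview."""
        if msg.role != "tool" or count_tokens(msg.content) <= TOOL_RESULT_TOKEN_LIMIT:
            return msg
        path = self._save("tool_results", msg.content)
        stub = f"[Full result saved to {path}]\nPreview:\n{msg.content[:PREVIEW_CHARS]}"
        return replace(msg, content=stub)

    def truncate_write_inputs(self, history: list) -> list:
        """Technique 2: above 85% usage, swap write/edit payloads for a pointer to the target file."""
        if self.usage(history) <= INPUT_TRUNCATION_RATIO:
            return history
        out = []
        for m in history:
            if m.tool_name in WRITE_TOOLS and m.tool_args and "content" in m.tool_args:
                # The payload already lives in the file it was written to, so a pointer suffices.
                pointer = f"[truncated; see {m.tool_args.get('path', '?')}]"
                m = replace(m, tool_args={**m.tool_args, "content": pointer})
            out.append(m)
        return out

    def summarize(self, history: list, summarizer: Callable[[list], dict]) -> list:
        """Technique 3: save the full history to a file and replace it with a structured summary."""
        path = self._save("history", json.dumps([m.__dict__ for m in history]))
        summary = summarizer(history)  # expected keys: session_intent, artifacts, next_steps
        text = (
            f"Session intent: {summary['session_intent']}\n"
            f"Artifacts: {', '.join(summary['artifacts'])}\n"
            f"Next steps: {summary['next_steps']}\n"
            f"Full history: {path}"
        )
        return [Message("system", text)]
```

In this sketch the three methods are independent; an agent loop would call `offload_tool_result` on each new tool result, `truncate_write_inputs` before each model call, and `summarize` only if usage is still too high afterwards, so the cheaper offloading steps are tried before the lossy one.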

Evaluating Strategies

To evaluate the effectiveness of compression strategies, the Deep Agents team recommends the following:

- Start with real-world benchmarks, then stress-test individual features.

- Test the recoverability of compressed information.

- Monitor the agent for goal drift.

The SDK includes targeted evaluations that isolate and validate individual context management mechanisms. For example, they verify that the agent keeps pursuing its objective after summarization and that it can recover previously compressed information by searching the filesystem. These evaluations work like integration tests: they shorten iteration cycles and make it easier to attribute a failure to a specific compression mechanism.
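The two checks named above, recoverability and goal retention, can be written as small pytest-style tests. This is a minimal sketch, not the SDK's evaluation suite: it assumes an in-memory dict as the filesystem and a deterministic stub in place of the LLM summarizer, and `offload`, `grep`, `check_goal_retained`, and the planted `checkpoint-id` fact are all hypothetical. The goal check is also a narrow proxy, since it inspects the summary rather than the agent's subsequent actions.

```python
import re
from typing import Callable, Dict, List


def offload(content: str, files: Dict[str, str], path: str, preview_chars: int = 100) -> str:
    """Hypothetical compression step: move content to a file, return the stub left in context."""
    files[path] = content
    return f"[saved to {path}] preview: {content[:preview_chars]}"


def grep(files: Dict[str, str], pattern: str) -> List[str]:
    """Hypothetical filesystem search tool: paths of files whose content matches the regex."""
    return [path for path, text in files.items() if re.search(pattern, text)]


def test_offloaded_fact_is_recoverable() -> None:
    """Recoverability: a fact pushed out of context must still be findable via filesystem search."""
    files: Dict[str, str] = {}
    needle = "checkpoint-id: 7f3a9c"  # synthetic fact planted deep inside a large tool result
    haystack = "filler line\n" * 5000 + needle + "\n" + "filler line\n" * 5000
    context = [offload(haystack, files, "/tool_results/logs.txt")]
    # The fact must really be gone from the context, otherwise the test proves nothing.
    assert not any(needle in msg for msg in context)
    hits = grep(files, r"checkpoint-id: \w+")
    assert hits == ["/tool_results/logs.txt"]
    assert needle in files[hits[0]]


def check_goal_retained(summarizer: Callable[[List[str]], Dict[str, str]],
                        history: List[str], objective: str) -> bool:
    """Goal drift proxy: the summary replacing the history must still state the objective."""
    summary = summarizer(history)
    return objective.lower() in summary.get("session_intent", "").lower()


def test_goal_survives_summarization() -> None:
    objective = "Migrate the billing service to Postgres"
    history = [objective] + [f"tool output {i}" for i in range(50)]

    def faithful_summarizer(h: List[str]) -> Dict[str, str]:
        # Deterministic stand-in for the LLM summarizer; a real eval would call the model.
        return {"session_intent": h[0], "artifacts": "", "next_steps": "run tests"}

    def drifting_summarizer(h: List[str]) -> Dict[str, str]:
        # Simulates a summary that lost the original objective.
        return {"session_intent": "write docs", "artifacts": "", "next_steps": ""}

    assert check_goal_retained(faithful_summarizer, history, objective)
    assert not check_goal_retained(drifting_summarizer, history, objective)


if __name__ == "__main__":
    test_offloaded_fact_is_recoverable()
    test_goal_survives_summarization()
    print("all targeted evals passed")
```

Because each test exercises exactly one mechanism, a failure points directly at offloading or at summarization rather than at the agent as a whole.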
