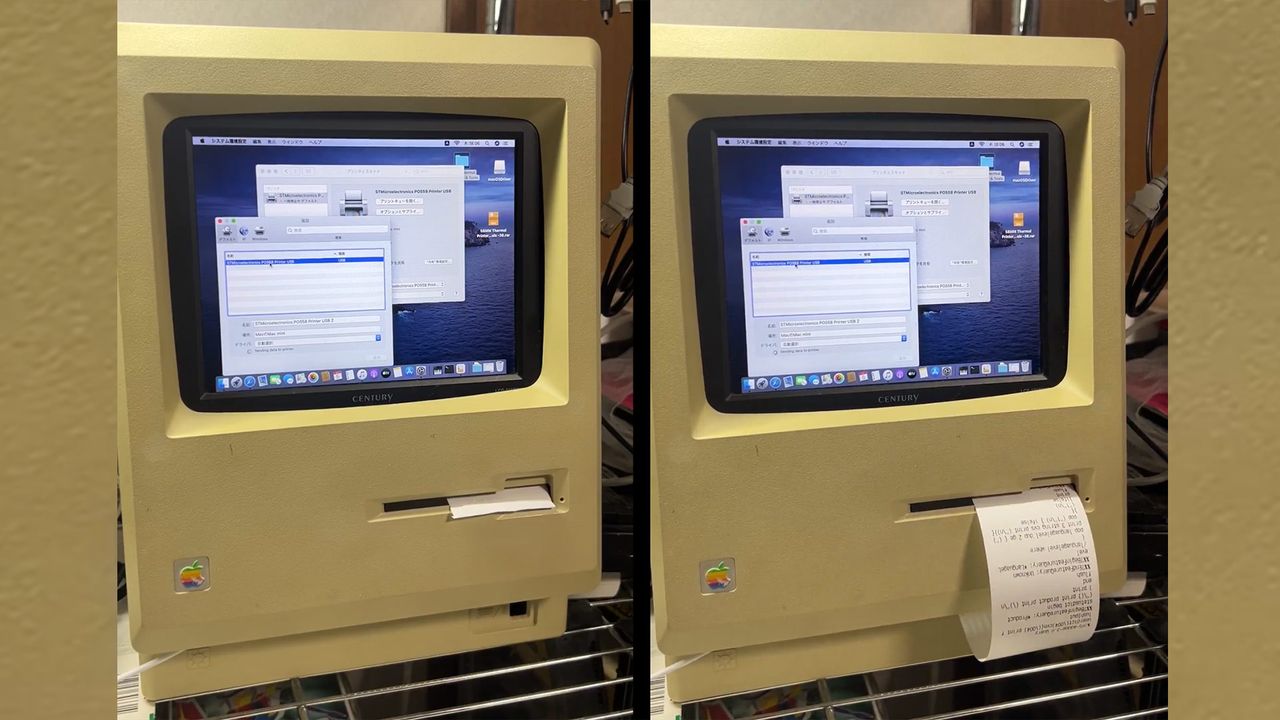

Optimizations in progress for llama.cpp

A Reddit user reported ongoing GitHub activity aimed at improving llama.cpp, a framework for large language model inference. The post gives no specific details of the improvements, but the activity suggests the project is under active development.